6 Leading AI Detection Tools for Academic Writing — A comparative analysis

The advent of AI content generators, exemplified by advanced models like ChatGPT, Claude AI, and Bard, has revolutionized the way we interact with language. These sophisticated large language models, trained on vast datasets of text and code, demonstrate an uncanny ability to produce diverse text formats, translate languages, and even create content that mirrors human writing styles.

While these applications bring transformative language interactions, they also carry notable drawbacks, including potential accuracy gaps, ethical concerns regarding deceptive use, misuse for malicious purposes, job displacement, biases from training data, organizational overreliance leading to diminished creativity, security threats, attribution difficulties, resistance to change, and unintended consequences. Striking a balance necessitates meticulous consideration, ethical frameworks, and ongoing research to navigate challenges while harnessing the benefits of evolving AI technologies.

Hence, AI detector tools have emerged to address a critical concern: determining whether a piece of text originates from a human or an artificial intelligence source. By scrutinizing specific patterns and attributes indicative of AI authorship, such as sentence length and word choice consistency, these tools aim to help users distinguish between human-created and AI-generated content.

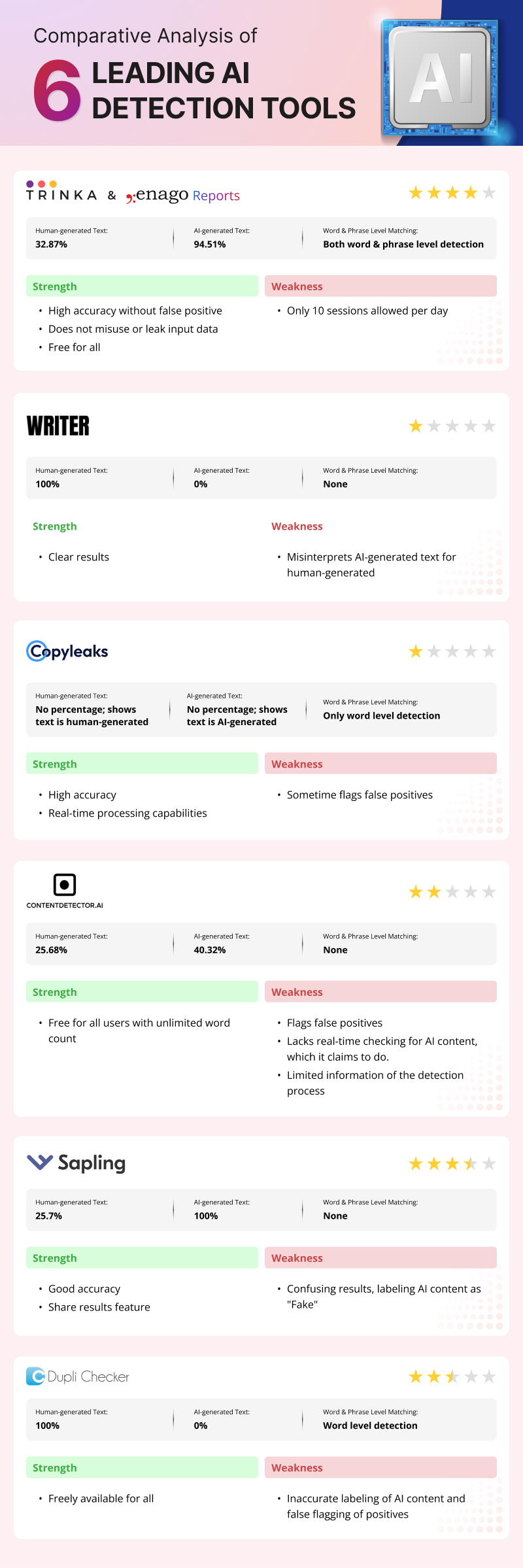

In this article, we present a comparative analysis of six prominent AI detection tools to evaluate how effectively they handle various types of academic articles. The tools under scrutiny are Trinka and Enago Reports AI Detector, Writer, Copyleaks, Contentdetector.ai, Sapling, and Duplichecker.

We evaluated the tools on two types of content: human-generated and AI-generated academic articles. The human-generated articles were written by subject matter experts, while the AI-generated content was produced using the latest language models to simulate academic writing. Both sets of articles covered similar topics and were comparable in length and complexity. By testing the tools on these two corpora, we aimed to assess their accuracy, quality of results, and usability across human-authored and AI-written text. A key focus is placed on accuracy, the readability of summaries, and user satisfaction.

Let’s explore!

Comparative Analysis of Top 6 AI Detector Tools

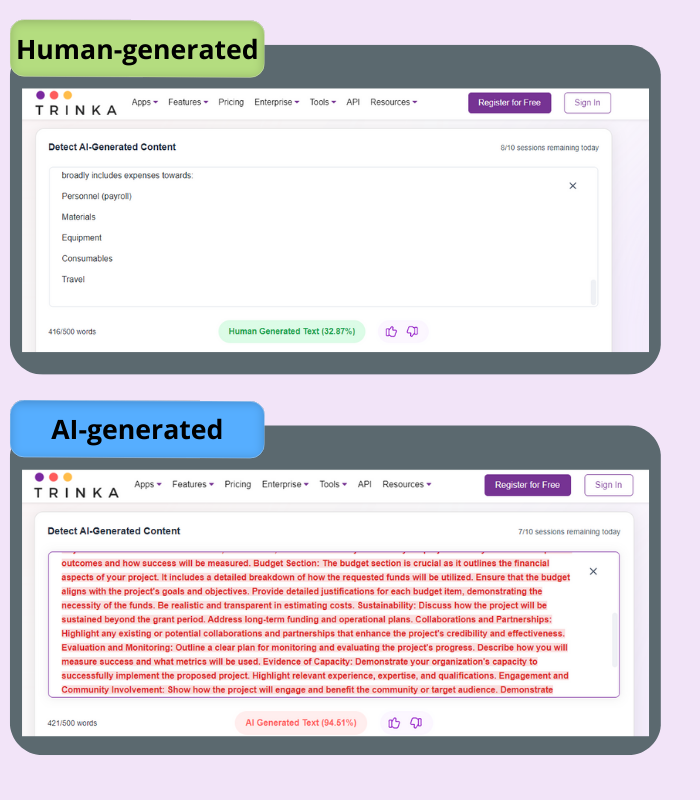

Note: In the image below, the percentage represents the probability that the content is AI-generated.

1. Trinka and Enago Reports AI Detector

Ease of Use and Integration – ⭐⭐⭐⭐

- This AI detector is available on both the Trinka and Enago Reports pages. It offers a seamless user experience with no sign-up requirement, allowing quick access and integration. The product is currently designed to work accurately only for English. It accepts text input only; document uploads are not supported.

- Users can run up to 10 text checks, called sessions, per day, and the count resets each day. The user interface shows the session count in real time, prominently displayed in the top right corner. Sessions are not transferable between days, ensuring a clear usage policy.

- It delivers results categorizing text as “Human Generated” or “AI Generated” along with a percentage score denoting the extent of AI content. Users are educated about the score’s meaning through detailed explanations provided post-analysis.

- The input text is constrained by a lower limit of 100 words and an upper limit of 500 words. Once a result is displayed, the text becomes uneditable, and users can return to the default state by clicking the cross [X] button.

Efficiency – ⭐⭐⭐⭐⭐

- Trinka and Enago Reports AI Detector correctly identifies and classifies human-written and AI-generated text.

- The detector bases its decision on the probability of two words being written together. If that probability is high, the content is more likely to be AI-generated.

- In our tests, it showed high accuracy and produced no false positives.

- It processes and detects data in real-time.

- It does not misuse or leak input data.
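The word-pair probability idea described above can be illustrated with a toy bigram model. This is a minimal sketch, not the detector's actual implementation: the function names, the tiny reference text, and the add-one smoothing are all assumptions chosen for illustration. It only shows how "the probability of two words being written together" can be turned into a single number for a passage.

```python
from collections import Counter


def tokenize(text):
    """Lowercase, split on whitespace, and strip surrounding punctuation."""
    words = (w.strip(".,;:!?\"'()") for w in text.lower().split())
    return [w for w in words if w]


def train_bigram_model(corpus):
    """Count single words and adjacent word pairs in a reference corpus."""
    tokens = tokenize(corpus)
    unigrams = Counter(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    return unigrams, bigrams


def mean_pair_probability(text, unigrams, bigrams):
    """Average P(next word | previous word) over all adjacent pairs.

    Add-one smoothing (an assumption of this sketch) gives unseen
    pairs a small nonzero probability instead of zero.
    """
    tokens = tokenize(text)
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        return 0.0
    vocab_size = len(unigrams) or 1
    total = 0.0
    for prev, nxt in pairs:
        total += (bigrams[(prev, nxt)] + 1) / (unigrams[prev] + vocab_size)
    return total / len(pairs)


# Hypothetical reference text; a real system would use a large corpus
# or a language model's own token probabilities.
reference = (
    "the results of the study show that the results "
    "are consistent with the study"
)
unigrams, bigrams = train_bigram_model(reference)

predictable = mean_pair_probability("the results of the study", unigrams, bigrams)
surprising = mean_pair_probability("study the of results the", unigrams, bigrams)
print(predictable > surprising)
```

A passage whose word pairs are highly predictable scores higher than one with unusual pairings. A detector built on this idea would still need a threshold calibrated on known human and AI text, which this sketch does not attempt.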

Cost – ⭐⭐⭐⭐⭐

- Currently, the product is free for all.

2. Writer

Ease of Use and Integration – ⭐⭐⭐

- Accessible directly on the Writer platform, it seamlessly integrates into the writing process.

- User-friendly, but the average UI may affect clarity.

- It primarily supports English inputs.

- Allows detection of only 1500 characters at a time.

Efficiency – ⭐⭐

- Writer's AI detector does not reliably distinguish between human-generated and AI-generated text.

- It produces false positives, which reduces the tool's overall reliability.

- Real-time processing contributes to prompt results.

Cost – ⭐⭐⭐

- It is free to use, but limits detection to 1500 characters.

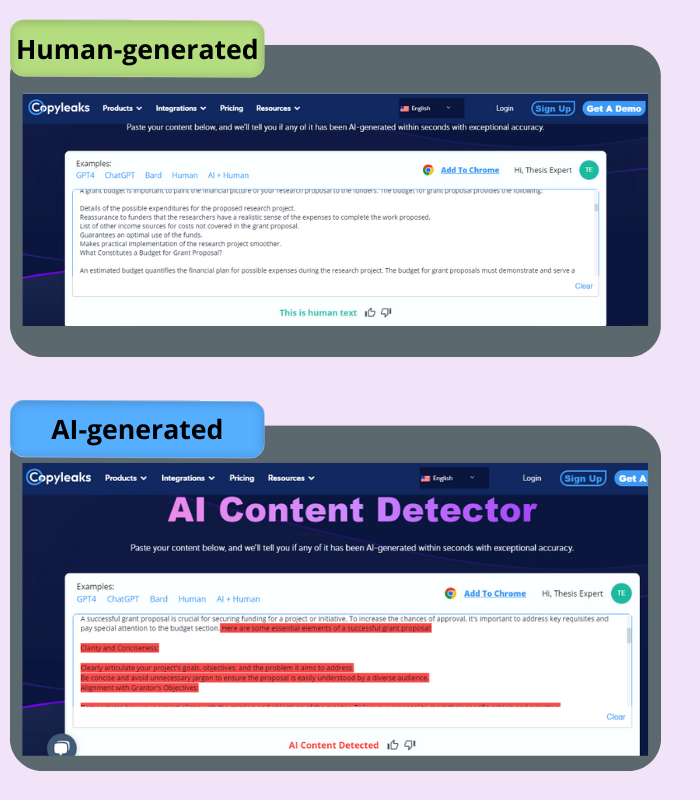

3. Copyleaks

Ease of Use and Integration – ⭐⭐⭐⭐⭐

- Good UI with a clear interface for enhanced user experience.

- It typically accepts English content.

Efficiency – ⭐⭐

- Copyleaks demonstrates questionable proficiency in accurately identifying text origins.

- Instances of false positives make its results undependable.

- Real-time processing capabilities contribute to efficient checks.

Cost – ⭐

- It has a monthly subscription plan of USD 8.33 that provides 1200 credits, where 1 credit covers up to 250 words. Credits are deducted in whole units, so exceeding the limit of a credit by even 1 word uses up the next credit. For example, checking 251 words deducts 2 credits.
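The credit rule above amounts to rounding the word count up to the next multiple of 250. A minimal sketch of that arithmetic follows; the function name and constant are illustrative only and are not part of any Copyleaks API.

```python
import math

WORDS_PER_CREDIT = 250  # 1 credit = 250 words, as described above


def credits_needed(word_count):
    """Return the credits deducted for a check of word_count words.

    Partial credits are rounded up, so 251 words cost 2 credits.
    """
    if word_count < 0:
        raise ValueError("word_count must be non-negative")
    return math.ceil(word_count / WORDS_PER_CREDIT)


print(credits_needed(250))  # 1
print(credits_needed(251))  # 2
```

In practice, this means trimming a submission to a multiple of 250 words avoids paying a full credit for a handful of extra words.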

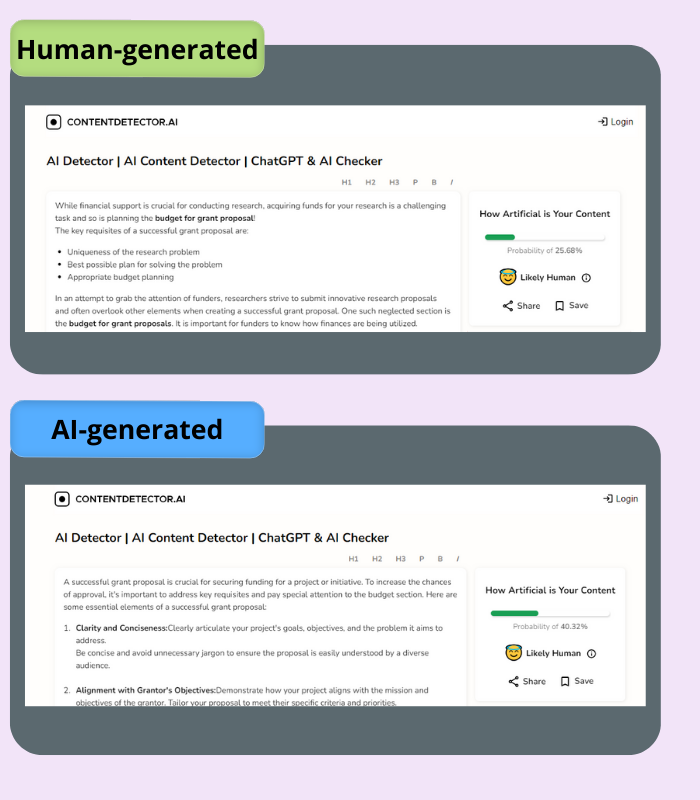

4. Contentdetector.ai

Ease of Use and Integration – ⭐⭐⭐⭐

- Availability on the Contentdetector.ai platform ensures easy access.

- Offers a good UI with a clear interface for enhanced user experience.

- Compatible with English inputs only.

Efficiency – ⭐⭐⭐

- Provides percentage based reporting.

- Fails to check for AI content in real time, despite advertising this capability.

Cost – ⭐⭐⭐⭐⭐

- Free to use for all with unlimited word count.

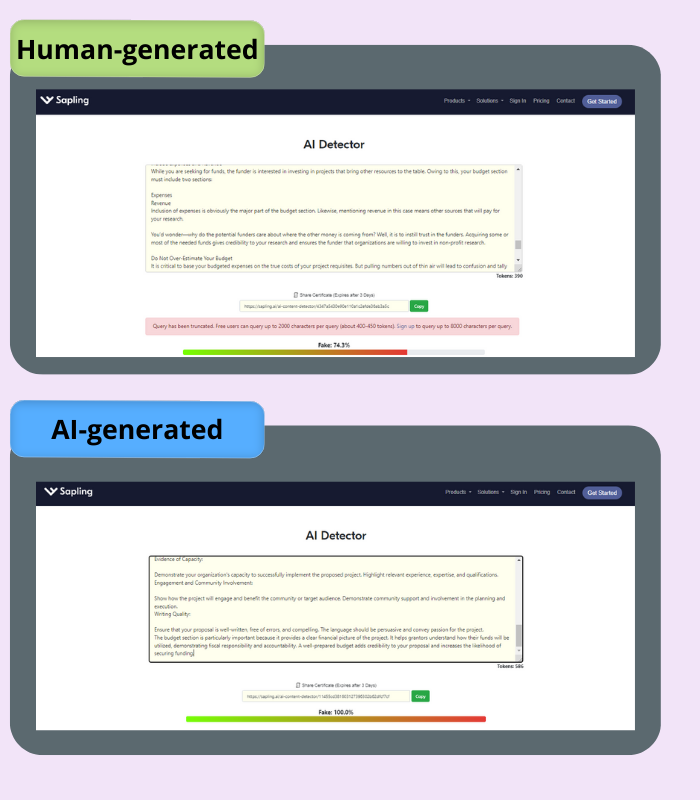

5. Sapling

Ease of Use and Integration – ⭐⭐

- UI quality is average, and results can be confusing.

- It also labels AI-generated content as “Fake”, which may be misleading.

- It accepts textual input in English.

Efficiency – ⭐⭐⭐

- Sapling is adequate at identifying the nature of text content.

- The absence of a “clear text” feature might impact user convenience.

- Features a “Share result” option for enhanced collaboration.

Cost – ⭐

- Allows 2000 characters for free; charges a monthly fee of USD 25 for unlimited access.

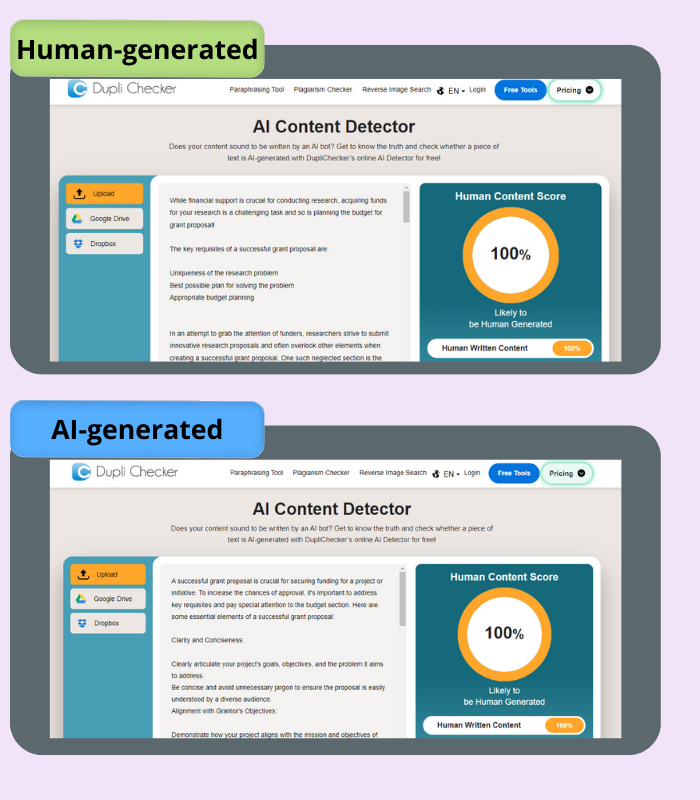

6. Duplichecker

Ease of Use and Integration – ⭐⭐⭐⭐⭐

- Its good UI allows an overall positive user experience.

- Allows inputs in English only.

Efficiency – ⭐⭐

- Duplichecker does not reliably identify and categorize text origins. It mistakes AI-generated text for human-generated text.

- It also produces false positives, misleading users and reducing overall accuracy.

- Real-time processing capabilities ensure prompt results.

Cost – ⭐⭐⭐⭐⭐

- It is free to use, but limits detection to 2000 words.

Takeaways for Users

1. Informed Decision-Making

- Publishers and editors must make informed decisions when selecting an AI detection tool for use in scholarly publishing workflows.

- These considerations should go beyond accuracy, encompassing factors like user interface, scalability, and overall user feedback.

2. Enhanced Editorial Processes

- The adoption of effective AI detection tools can enhance editorial processes by streamlining the identification of AI-generated content.

- This, in turn, enables publishers and editors to maintain the integrity of academic publications and uphold ethical standards.

3. Author Awareness

- Authors should be aware of the existence and utilization of AI detection tools in the publishing process.

- Clear communication from publishers can help authors understand how these tools contribute to maintaining the authenticity of scholarly content.

Challenges and Limitations of Current AI Detection Tools

1. False Positives and Negatives

Many tools, including Duplichecker, face challenges in accurately labeling content. False positives and negatives can compromise the reliability of results, posing challenges for users who depend on these tools for precise identification of AI-generated content.
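To make these two error types concrete, the sketch below computes false positive and false negative rates from a set of labeled test results. The function name and the sample labels are hypothetical and are not data from this analysis; "ai" is treated as the positive class, so a false positive is human text flagged as AI and a false negative is AI text passed as human.

```python
def error_rates(true_labels, predicted_labels, positive="ai"):
    """Compute false positive rate, false negative rate, and accuracy."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    tp = fp = tn = fn = 0
    for truth, pred in zip(true_labels, predicted_labels):
        if pred == positive and truth == positive:
            tp += 1  # AI text correctly flagged
        elif pred == positive:
            fp += 1  # human text wrongly flagged as AI
        elif truth == positive:
            fn += 1  # AI text wrongly passed as human
        else:
            tn += 1  # human text correctly passed
    negatives = fp + tn  # all truly human samples
    positives = fn + tp  # all truly AI samples
    total = len(true_labels)
    return {
        "false_positive_rate": fp / negatives if negatives else 0.0,
        "false_negative_rate": fn / positives if positives else 0.0,
        "accuracy": (tp + tn) / total if total else 0.0,
    }


# Hypothetical results for eight test articles (illustration only).
truth = ["human", "human", "human", "human", "ai", "ai", "ai", "ai"]
predicted = ["human", "ai", "human", "human", "ai", "human", "ai", "ai"]

rates = error_rates(truth, predicted)
print(rates)
```

Reporting both rates separately matters in academic settings, because wrongly accusing a human author (a false positive) and missing AI-written text (a false negative) carry very different consequences.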

2. Limited Multilingual Support

Our comparative analysis indicates that all the tools are tailored for accuracy in English but may not perform as proficiently in other languages. This limitation restricts the universality of these tools and calls for advancements in multilingual support.

3. Ambiguous Detection Process

Several tools, such as Writer and Contentdetector.ai, lack transparency in providing detailed information about the detection process. Users may find it challenging to trust results when the inner workings of the AI models are not clearly communicated.

4. Lack of Standardization

The absence of standardized metrics for AI detection tools complicates the comparison process. Publishers and users face challenges in benchmarking tools against each other, making it crucial for the industry to work towards establishing standardized evaluation criteria.

Recently, OpenAI, the originator of ChatGPT, introduced an AI classifier tool for English-language text. Positioned as a solution to detect AI-generated content, the tool was criticized for generating false positives and false negatives during evaluations and was eventually shut down due to a “low rate of accuracy.” OpenAI openly acknowledged the tool's limitations and committed to researching more effective provenance techniques for text. This setback underscores the complexity of ensuring accuracy in AI detection as generative AI technology and chatbots continue to grow.

While AI detection tools present valuable contributions to scholarly publishing, challenges and limitations persist. Publishers, editors, and authors must navigate these complexities to integrate these tools effectively into their workflows, ultimately preserving the integrity of academic content. Continued research and development in the field hold the key to addressing current limitations and advancing the capabilities of AI detection tools in scholarly publishing.

Future iterations of AI detectors may not only identify AI-generated content but also discern the specific type of AI utilized, marking a significant step forward in distinguishing between various language models.

The comparative study reveals nuanced insights into the strengths and weaknesses of various AI detection tools in the realm of scholarly publishing. The choice of the best tool depends on specific needs. Trinka and Enago Reports AI Detector, despite its limitations, may be suitable for accurate and free detection. However, it is imperative to consider your priorities, such as accuracy, user experience, and cost, to make an informed decision.