Improving Peer Review With Technological Innovations: A Comparative Analysis of 6 AI Tools

The volume of research output increased by over 60% between 2011 and 2021 alone. As research continues to grow exponentially, the editorial and peer review system is under increasing pressure to maintain thorough assessments. Peer reviewers, who often juggle multiple academic and research responsibilities, already devote a significant amount of time to reviewing manuscripts. Even so, traditional peer review methods struggle to keep up with the expanding volume of research.

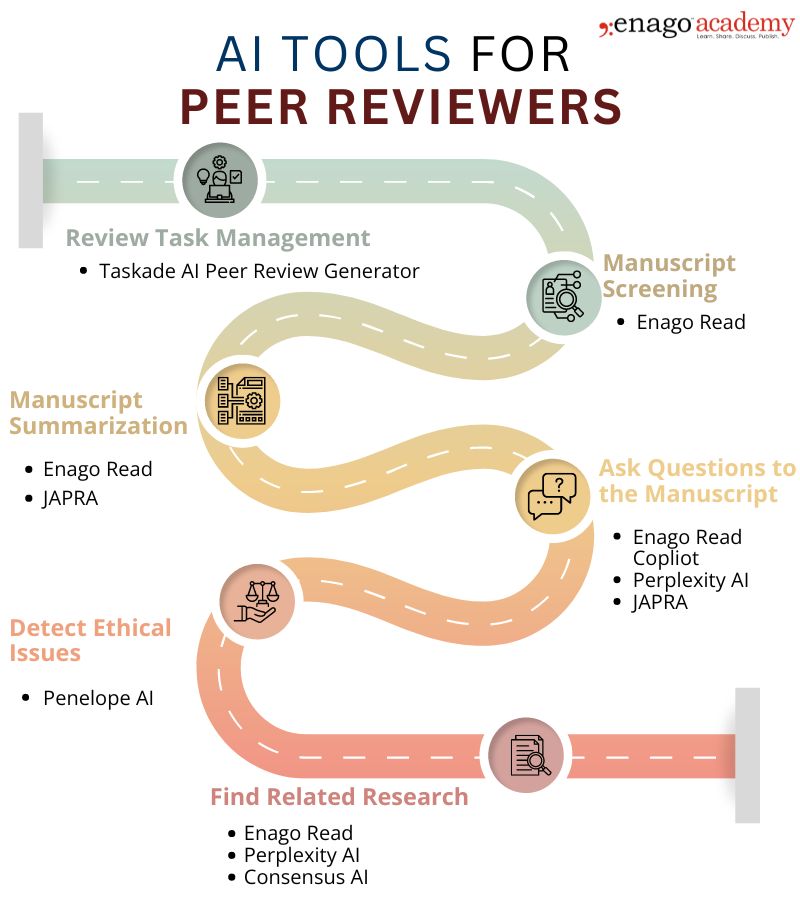

Technological advancements, particularly artificial intelligence (AI), can save reviewers time by automating tasks such as manuscript screening, gap identification, and detection of ethical issues, thereby expediting the publication process. Here are six tools that could transform your peer review process.

6 Tools for Peer Reviewers

1. Enago Read

Features:

- Automated screening

- Manuscript summarization

- Identification of key insights

- Detection of gaps in the manuscript

- Finding related literature

Pros:

- Saves time in manuscript review

- Has a user-friendly interface

- Simplifies manuscript reading for reviewers

- Allows users to interact with the manuscript through Copilot

- Provides section-wise summary and key insights

- Works well with various document types

- Explores related research

- Has a well-defined privacy statement

Cons:

- The free version allows a limited number of uses

- Provides references exclusively from open-access sources

2. Taskade AI Peer Review Generator

Features:

- Tracking and managing review tasks

- Designing rough manuscript outlines

Pros:

- Saves time in task organization

- Enables efficient task delegation

- Integrates with popular productivity platforms

- Provides structured outlines

Cons:

- Not robust enough for in-depth manuscript analysis

- May miss certain details from the manuscript during analysis

- Requires tailored prompts for optimal results

- The free version has a limited number of uses

- Interface is not very user-friendly

3. Consensus AI

Features:

- Identification of relevant research papers from Semantic Scholar

- AI-driven consensus building in research evaluation

- Allows users to interact with the references through Copilot

Pros:

- Has a user-friendly interface

- Provides a quick snapshot of the identified papers

- Helps users understand the identified references more quickly

Cons:

- Limited file integration options

- Not well suited to manuscript analysis

- Limited number of credits for free users

4. Journal Article Peer Review Assistant (JAPRA)

Features:

- Custom GPT interface

- Manuscript summarization

Pros:

- Free

- User-friendly

- Identifies key areas for improvement

Cons:

- May not grasp subject-specific terms

- May miss disciplinary nuances

- Cannot interpret novel approaches/methodologies

5. Perplexity AI

Features:

- Automated screening

- Textual analysis

- Bias detection

- Predictive modeling

Pros:

- Saves time in preliminary manuscript evaluation

- Has a user-friendly interface

- Cites references when answering questions

- Provides context-specific references

Cons:

- Limited contextual understanding for manuscript analysis

- Accepts limited file types

- May provide repetitive information

6. Penelope AI

Features:

- Verification of the ethical aspects of a manuscript

- Assessment of the manuscript for inclusion of all relevant sections

- Identification of potential biases

Pros:

- Checks ethical issues in a manuscript

- Checks each section of the manuscript and provides complete feedback

- Has a well-defined privacy statement

Cons:

- Accepts limited file types

- Paid

- Takes longer to provide feedback

- Does not provide related research for cross-referencing

Each AI tool has its own strengths and limitations. Some tools excel in specific areas such as manuscript summarization, while others are better at identifying research gaps or detecting ethical issues. Using multiple tools in tandem can therefore help offset each tool's limitations, since their capabilities complement one another.

Although these tools can expedite the review process and reduce reviewer fatigue, their use must be carefully overseen. AI tools, which function based on trained models, may fall short of the nuanced analysis and critical thinking that human reviewers bring to the process. By striking an appropriate balance between technology and human expertise, research assessment can keep pace with growing global scholarship.