AI in Academia: Comparing ChatGPT, DeepSeek, Perplexity, and Gemini

In the rapidly evolving world of artificial intelligence, several conversational AI tools have emerged, each offering unique strengths and capabilities. Generative AI (Gen-AI) models hold potential to transform academic research by assisting with literature reviews, manuscript drafting, and data analysis. This article compares four prominent models with a researcher’s needs in mind.

Key Factors Researchers Should Consider Before Choosing an LLM

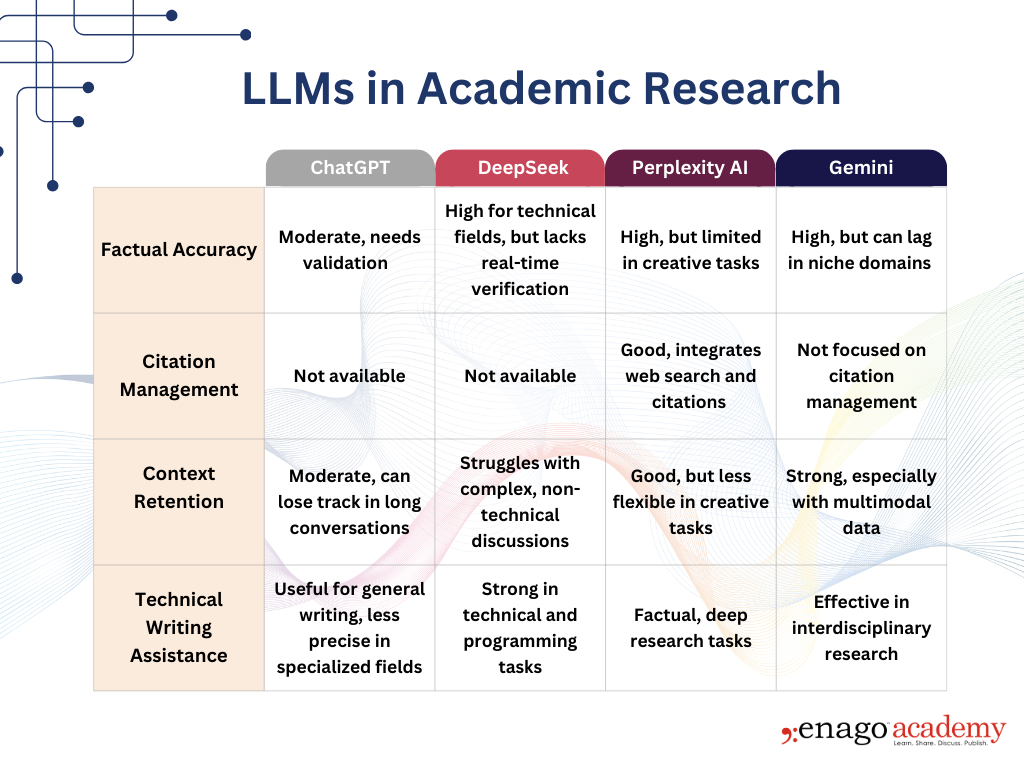

A researcher should weigh several key parameters when using large language models (LLMs) to ensure quality and integrity. These include:

- The model must ensure factual accuracy while providing outputs.

- It should provide relevant references and citations, which strengthen the credibility of the manuscript.

- It should be capable of context retention, which helps preserve the flow of complex ideas.

- It should also be able to assist with technical writing, helping researchers express their findings while adhering to the standards of their specific field.

- It is also important to understand that an AI might generate incorrect or fabricated information (AI hallucinations), which needs stringent oversight by the researcher.

Let’s take a closer look at each model, breaking down their strengths and limitations, how they cater to different academic writing needs, and the specific challenges researchers encounter when using them.

ChatGPT (Generative Pre-Trained Transformer)

ChatGPT excels at generating human-like responses, making it a valuable tool for drafting content and generating outlines. It is particularly effective for brainstorming, drafting documents, and helping writers organize their thoughts or structure essays. However, prompt engineering is crucial for researchers using GPT models. By refining prompts and providing clear instructions, researchers can guide the AI to generate accurate, relevant responses. This technique helps the model stay focused, extract precise information, and meet domain-specific needs, ultimately improving output quality.
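As a rough illustration of what "refining prompts and providing clear instructions" can look like in practice, here is a minimal Python sketch that assembles a prompt from explicit parts. The `ResearchPrompt` class and its field names are hypothetical, invented for this example; they are not part of any ChatGPT or OpenAI interface, and the resulting string would simply be pasted or sent to whichever model you use.

```python
from dataclasses import dataclass, field


@dataclass
class ResearchPrompt:
    """Hypothetical container for the parts of a structured prompt."""

    role: str
    task: str
    context: str = ""
    constraints: list[str] = field(default_factory=list)
    output_format: str = ""

    def render(self) -> str:
        """Join the non-empty parts into one prompt string."""
        sections = [f"Role: {self.role}", f"Task: {self.task}"]
        if self.context:
            sections.append(f"Context: {self.context}")
        if self.constraints:
            bullet_lines = "\n".join(f"- {c}" for c in self.constraints)
            sections.append(f"Constraints:\n{bullet_lines}")
        if self.output_format:
            sections.append(f"Output format: {self.output_format}")
        return "\n\n".join(sections)


# Example values are illustrative only.
prompt = ResearchPrompt(
    role="You are an assistant helping a researcher draft a manuscript.",
    task="Suggest an outline for the discussion section.",
    context="The study findings are pasted below the prompt.",
    constraints=[
        "Do not invent references; mark any claim that needs a citation.",
        "Say so explicitly when you are unsure.",
    ],
    output_format="A numbered list of headings, one sentence each.",
)

print(prompt.render())
```

Spelling out the role, task, constraints, and expected format separately makes it easier to see which instruction to adjust when the response drifts off topic.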

While ChatGPT’s conversational abilities are impressive, it has notable limitations. Factual accuracy remains a significant concern: unless its web search feature is used, it has no real-time internet access and cannot verify current facts or retrieve up-to-date references. It also does not manage citations. Researchers must therefore cross-check its content against credible sources, particularly for specialized or time-sensitive information. Furthermore, ChatGPT struggles with context retention during long conversations, particularly when switching topics or revisiting earlier points.

However, Operator, OpenAI’s new agent powered by its Computer-Using Agent (CUA) model, can autonomously interact with digital interfaces and navigate graphical user interfaces (GUIs). This makes it a powerful tool for expanding AI use in everyday digital environments, and it shows promise for research applications.

DeepSeek

Specialized in domain-specific analysis, DeepSeek excels in fields such as computer science, engineering, and other STEM disciplines. Its strengths lie in computational tasks, code generation, debugging, and algorithm development assistance. Additionally, its open-sourcing policies could disrupt the competitive AI landscape by making advanced tools more accessible to small businesses, researchers, and hobbyists.

DeepSeek’s responses reportedly show biases stemming from its training data, and it has difficulty handling lengthy, complex conversations, particularly when switching between technical and non-technical topics. It cannot look up current references or manage citations, so users must cross-check and validate the information it provides.

Moreover, DeepSeek’s cost-effectiveness was one of the factors that added to its novelty. However, a recent report from SemiAnalysis suggests that its development costs are significantly higher than the claimed $6 million.

Perplexity AI

Perplexity shines in deep research tasks. It integrates web search to pull in real-time data, references, and citations, making it an invaluable tool for researchers who need frequent updates or want to access the latest studies. Perplexity’s Deep Research tool further enhances its offerings by providing a faster, more efficient way to gather detailed research from multiple sources, and it positions the company as a significant competitor to AI giants like OpenAI and Google. It excels at helping with citation management and identifying credible sources.

Perplexity AI has recently released an uncensored version of the DeepSeek R1 model, called R1 1776. The release follows a post-training process in which the model was fine-tuned on a dataset of 40,000 multilingual prompts to address politically sensitive topics that DeepSeek R1 avoided. R1 1776 retains the original model’s strong reasoning abilities while offering greater openness, allowing for more transparent and honest AI interactions.

However, Perplexity’s focus on factual accuracy sometimes sacrifices narrative creativity, making it less flexible when researchers require more exploratory or imaginative content. It is best suited for tasks requiring factual information and may not be ideal for hypothesis generation. Additionally, its inability to fully grasp human reasoning can result in a lack of depth in ideation and in technical responses, limiting its utility for highly specialized research.

Gemini AI

Gemini, another advanced tool, stands out for its multimodal capabilities, processing not only text but also images, charts, and videos. This makes it invaluable in fields like medical research, where diverse data types need to be analyzed and synthesized. Gemini’s ability to handle multiple data formats simultaneously is especially beneficial for interdisciplinary research. Gemini has made its debut in Google Workspace, where its Deep Research feature offers detailed reports and analytical tools for tasks like lesson planning, client reports, and research preparation. However, to streamline the Gemini experience, Google has recently removed Gemini from the main Google app for iOS and now encourages users to download the standalone Gemini app for full access.

A new multi-agent AI system called “AI Co-Scientist”, built on the Gemini 2.0 model, acts as a virtual scientific collaborator, helping scientists generate novel research hypotheses and proposals. Its agents iterate on, evaluate, and refine hypotheses, drawing on web search and specialized AI models. The tool has already shown success in validating hypotheses in drug repurposing and antimicrobial resistance.

While Gemini excels at generating structured content, it may struggle with subjective interpretation in more abstract topics. Currently, its response accuracy may lag in highly specialized scientific domains, where its answers can lack sufficient context or clarity, especially when dealing with complex, multi-step problems.

Each of these AI models offers distinct strengths that can significantly contribute to enhancing research productivity. By adopting a hybrid approach, researchers can leverage the efficiency of AI tools while ensuring that facts and insights are validated through traditional research methods and human expertise. This balance enables researchers to speed up tasks like literature discovery, data analysis, and manuscript drafting, while maintaining the rigorous standards of academic work.


Government Policies, Censorship & Academic Collaboration

The effectiveness and accessibility of AI tools are shaped by government regulations, particularly those related to data use and AI model biases. As journal guidelines evolve around AI-generated content, citation integrity, and fact-checking, researchers must stay informed to navigate academic publishing’s changing landscape. Geographical restrictions and government-imposed limitations, like internet censorship, can further affect a researcher’s ability to fully leverage AI’s multimodal capabilities. AI censorship may hinder collaboration by restricting access to research materials and limiting diverse perspectives.

Governments worldwide are imposing bans or regulations on large language models (LLMs) like OpenAI’s GPT series, Google’s Gemini, and China’s DeepSeek R1 due to concerns about privacy, misinformation, and national security. The European Union has proposed strict regulations to ensure AI models operate transparently, protect citizens’ data, and prevent harmful content; these rules affect models like GPT-4. Authorities in several countries, including Australia, Italy, Ireland, and South Korea, are banning DeepSeek’s app on government devices due to security concerns, such as potential data collection by the Chinese government.

Meanwhile, in the U.S., discussions around regulating models like GPT and Gemini focus on addressing ethical concerns, such as bias, job displacement, and the potential for AI-driven misinformation. These global restrictions highlight the varying approaches to managing the rapid evolution of AI technologies. In a related development, Perplexity AI has open-sourced R1 1776, an uncensored version of the Chinese-developed DeepSeek R1 model, removing built-in censorship to provide more open and truthful responses. The release also raises questions about the balance between moderation and censorship, highlighting the tension between safeguarding sensitive topics and promoting free speech.

As governments and regulatory bodies continue to navigate the complex landscape of AI regulation, researchers must remain informed about the potential risks as well as the advancements in these tools. This vigilance is essential for ensuring that AI technologies are harnessed effectively while maintaining academic integrity and fostering global collaboration. The challenges posed by geographical restrictions, AI censorship, and model biases highlight the need for researchers to think strategically about which AI tools to use, whether for literature discovery, manuscript drafting, or technical analysis. By thoughtfully combining these powerful AI tools, researchers can boost their efficiency without sacrificing the depth and rigor that academic work demands. The future of research lies in striking the right balance between cutting-edge technology and traditional scholarly practices.