Scientific Fraud: How Journals Detect Image Manipulation (Part 1)

In 2009, researcher Hwang Woo-Suk was convicted on charges that included embezzlement and the unethical procurement of human eggs. Among his less widely reported ethical violations, however, was the manipulation of images to show negative staining for a cell-surface marker.

In 2013, readers of Cell discovered duplicate images in a paper by reproductive biologist Shoukhrat Mitalipov. In 2016, Pfizer cancer researcher Min-Jean Yin was fired for duplicating Western blot images. Similarly, Portuguese scientist Sonia Melo lost her grant funding for the same reason. Mitalipov and Melo insist that their duplications were due only to sloppiness and that their conclusions are still reproducible.

What Is Wrong with These Pictures?

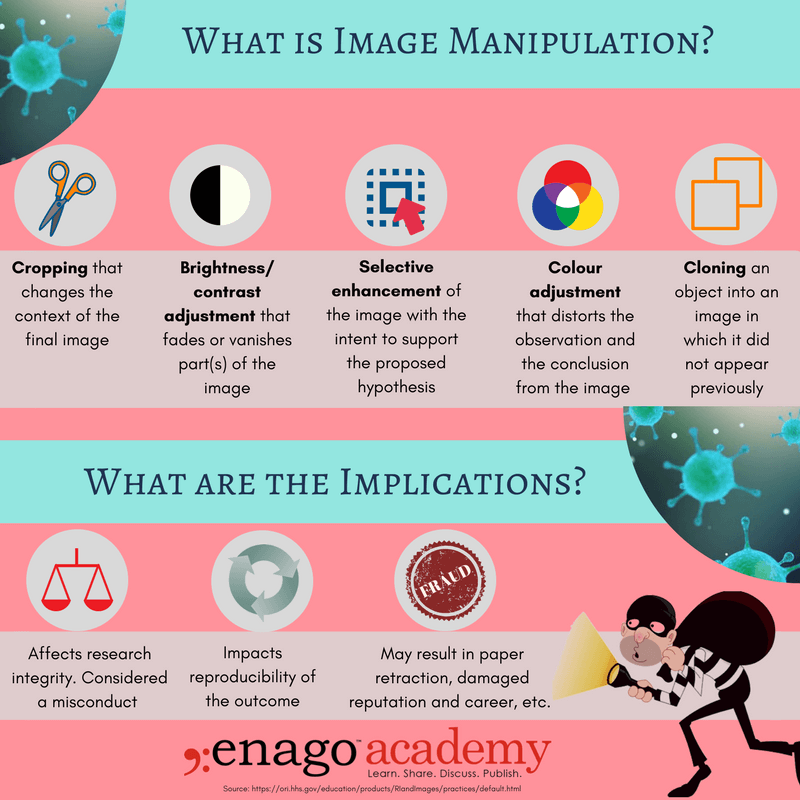

Microbiologist Elisabeth Bik is a leading authority on image integrity in scientific publishing. In her 2016 study, “The Prevalence of Inappropriate Image Duplication in Biomedical Research Publications,” she acknowledges that “inaccuracies in scientific papers have many causes,” and sloppiness is one of them. Some misrepresentations “result from honest mistakes while others are intentional and constitute research misconduct, including situations in which data is altered, omitted, manufactured or misrepresented in a way that fits the desired outcome.” Bik assigned problematic images to five categories: simple duplication, duplication with repositioning, duplication with alteration, cuts, and beautification.

Cuts and beautification (the latter of which can assist readers with color blindness) don’t always constitute research misconduct. Duplication almost always does. Whether the problem is intentional or accidental, experiments built on flawed findings are invalid, and papers that rely on manipulated images must be retracted. That’s why the majority of the scientific community agrees that journal editors and peer reviewers have an important role in identifying data integrity issues before publication.

Yet even after the Hwang and Yin scandals, many such violations of image integrity are discovered by readers after publication, partly because discerning unethical image processing can be very difficult. As one PubPeer commenter notes, “It is so easy to cheat … without leaving traces …”

Will Journals Take Charge?

Many journals have implemented screening procedures, with the Journal of Cell Biology (JCB) and its then–managing editor Mike Rossner leading the pack in 2002.

Journals have struggled with the responsibility, though. As former Science editor Donald Kennedy complained in 2006, “We are … considering the kinds of special attention that might be given to … high-risk papers … [including] more intensive evaluation of the treatment of digital images … [But] the experience will be time-consuming and expensive for the journal and may lead to conflict with authors.”

We still lack a shared rulebook for image integrity. The Council of Science Editors (CSE) assigns responsibility to authors, recommending that they disclose alterations even when the data are not misrepresented. For journals, the CSE provides links to guidelines such as those of Rockefeller University Press. These guidelines prohibit enhancing, obscuring, moving, removing, or introducing any specific features within images but permit some adjustments to brightness, contrast, color, and groupings. However, neither the CSE nor the Office of Research Integrity has implemented any universal, mandatory standards for publishing scientific images.

Will Authors Take Charge?

Even some scientists are reluctant to accept strict guidelines on data integrity. In 2014, the Committee on Publication Ethics shared a concern from the managing editor of a scientific journal: “Many laboratories consider photographs as illustrations that can be manipulated, and not as original data. Thus gels are often cleaned of impurities, bands are cut out and photographs of plant material only serve to show what the authors want to demonstrate, and the material does not necessarily originate from the experiment in question.”

The editor emphasized scientists’ resistance to journals’ attempts to protect against research misconduct in image processing: “When the editor-in-chief rejected such a manuscript, a typical response was: ‘I am surprised by the question and problem you pointed out in our manuscript. I checked the pictures you mentioned and I agree that they are really identical. But please be reminded that the purpose of these gel pictures was only to show the different types of banding pattern, and the gels of a few specific types were not very clear, so my PhD student repeatedly used the clearer ones. This misleading usage does not have an influence on data statistics or the final conclusion.’”

So, who is really in charge, and what measures can each side take? Stay tuned for our next article, which discusses the measures and techniques used to detect image manipulation in scientific publishing.